AI, especially in the realm of large language models (LLMs), is rapidly reshaping industries, from legal services to healthcare. However, it's important to recognize that these systems, often built as probabilistic models, are not flawless. They predict outcomes based on the data they've been trained on, which sometimes leads to mistakes, commonly known as hallucinations—where AI confidently provides incorrect or nonsensical information.

The Hallucination Problem: Why It Happens

Hallucinations occur because LLMs are probabilistic in nature, meaning they predict the next token based on the likelihood of it fitting within the context of previous tokens. This probability-based approach can misfire when different potential outcomes are too close in likelihood, resulting in the model generating information that isn't grounded in reality or human logic.
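To make the "too close in likelihood" point concrete, here is a minimal sketch of next-token sampling in plain Python. The candidate tokens and their scores are invented for illustration and do not come from any real model; the point is only that when scores are nearly tied, the sampler picks each candidate about as often as the others, whether or not it is factually right.

```python
import math
import random

def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits.values())
    exps = {tok: math.exp(score - peak) for tok, score in logits.items()}
    total = sum(exps.values())
    return {tok: value / total for tok, value in exps.items()}

def sample_next_token(logits, rng):
    """Draw one token at random, weighted by its probability."""
    probs = softmax(logits)
    tokens = list(probs)
    weights = [probs[tok] for tok in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores for the token after "The statute was enacted in".
# The values are made up; the three candidates are nearly tied.
logits = {"1994": 2.10, "1996": 2.05, "2001": 1.95}

rng = random.Random(0)  # fixed seed so the run is repeatable
probs = softmax(logits)
samples = [sample_next_token(logits, rng) for _ in range(1000)]
counts = {tok: samples.count(tok) for tok in logits}

print(probs)   # each candidate gets roughly a third of the probability mass
print(counts)  # so each one is chosen in a large share of the 1000 draws
```

Only one of those years can be correct, yet the model has no strong preference among them. That is the situation in which a fluent but wrong answer is most likely.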

"AI hallucinations are a byproduct of these probabilistic models, which don't always understand the full context in the way a human would," explains Bryan Lee, CEO of Ruli. "At Ruli, we've focused on reducing this issue by enhancing how AI processes data, introducing real-world guardrails, and pairing your knowledge base with our AI platform to ensure the output is more aligned with user expectations."

How Ruli Solves the Problem

To combat hallucinations and improve the reliability of AI outputs, Ruli uses several key innovations that set it apart from other solutions:

Retrieval-Augmented Generation (RAG): Ruli's system doesn't rely solely on the AI's training data. Instead, it combines internal knowledge with external verified sources during the reasoning process, ensuring that the AI can reference real-time, accurate information. This step significantly reduces the risk of the model fabricating responses.

Think of RAG like a lawyer with a smart legal assistant. When asked a complex question, the lawyer sends the assistant to quickly find the most relevant case files or legal documents. Then, using that information, the lawyer crafts a tailored, well-informed response. In RAG, the Ruli AI does both jobs: the assistant's job of retrieving information and the lawyer's job of creating a thoughtful answer from it.
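The retrieve-then-answer flow can be sketched in a few lines of Python. This is an illustration of the general RAG pattern, not Ruli's implementation: the knowledge base, the word-overlap scoring (a stand-in for real vector search), and every function name here are assumptions, and the final call to a language model is left out.

```python
import re

# Hypothetical in-memory knowledge base; a real system would use a document store.
KNOWLEDGE_BASE = [
    {"id": "doc-1", "text": "The lease term is five years beginning January 1, 2024."},
    {"id": "doc-2", "text": "Either party may terminate the agreement with ninety days written notice."},
    {"id": "doc-3", "text": "The supplier warrants the goods against defects for twelve months."},
]

STOPWORDS = {"the", "is", "to", "a", "of", "how", "much", "with", "for"}

def tokenize(text):
    """Lowercase the text and keep its distinct, non-trivial words."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS

def retrieve(question, documents, top_k=2):
    """The 'assistant' step: rank documents by word overlap with the question."""
    q_words = tokenize(question)
    scored = [(len(q_words & tokenize(d["text"])), d) for d in documents]
    scored = [(score, d) for score, d in scored if score > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [d for _, d in scored[:top_k]]

def build_prompt(question, passages):
    """The 'lawyer' step starts here: the model sees the sources, not just the question."""
    context = "\n".join(f"[{p['id']}] {p['text']}" for p in passages)
    return (
        "Answer using only the sources below and cite their ids.\n"
        f"Sources:\n{context}\n"
        f"Question: {question}\n"
    )

question = "How much notice is required to terminate the agreement?"
passages = retrieve(question, KNOWLEDGE_BASE)
prompt = build_prompt(question, passages)
print(prompt)
```

Because the prompt carries the retrieved passage and its id, the model can quote and cite a real source instead of relying on what it remembers from training.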

Isolated Data Environments for Privacy: Unlike many solutions that process data in shared, multi-tenant environments, Ruli processes each client's data in an isolated environment. This protects sensitive client information and keeps data secure and private, a crucial differentiator in industries like legal and finance.

Optimized Data Pipeline: Ruli has built a highly flexible and optimized data pipeline capable of extracting quality information from a wide variety of unstructured sources. This pipeline ensures that the AI is fed with the most relevant and accurate data, which improves the quality of its outputs and reduces errors.

Frictionless User Experience: Ruli's interface is designed for intuitive user interactions, allowing professionals to engage with the AI effortlessly. Whether they are legal teams or business leaders, users can provide feedback and make adjustments directly in the app, and that input is then fed back into the model to improve future interactions.

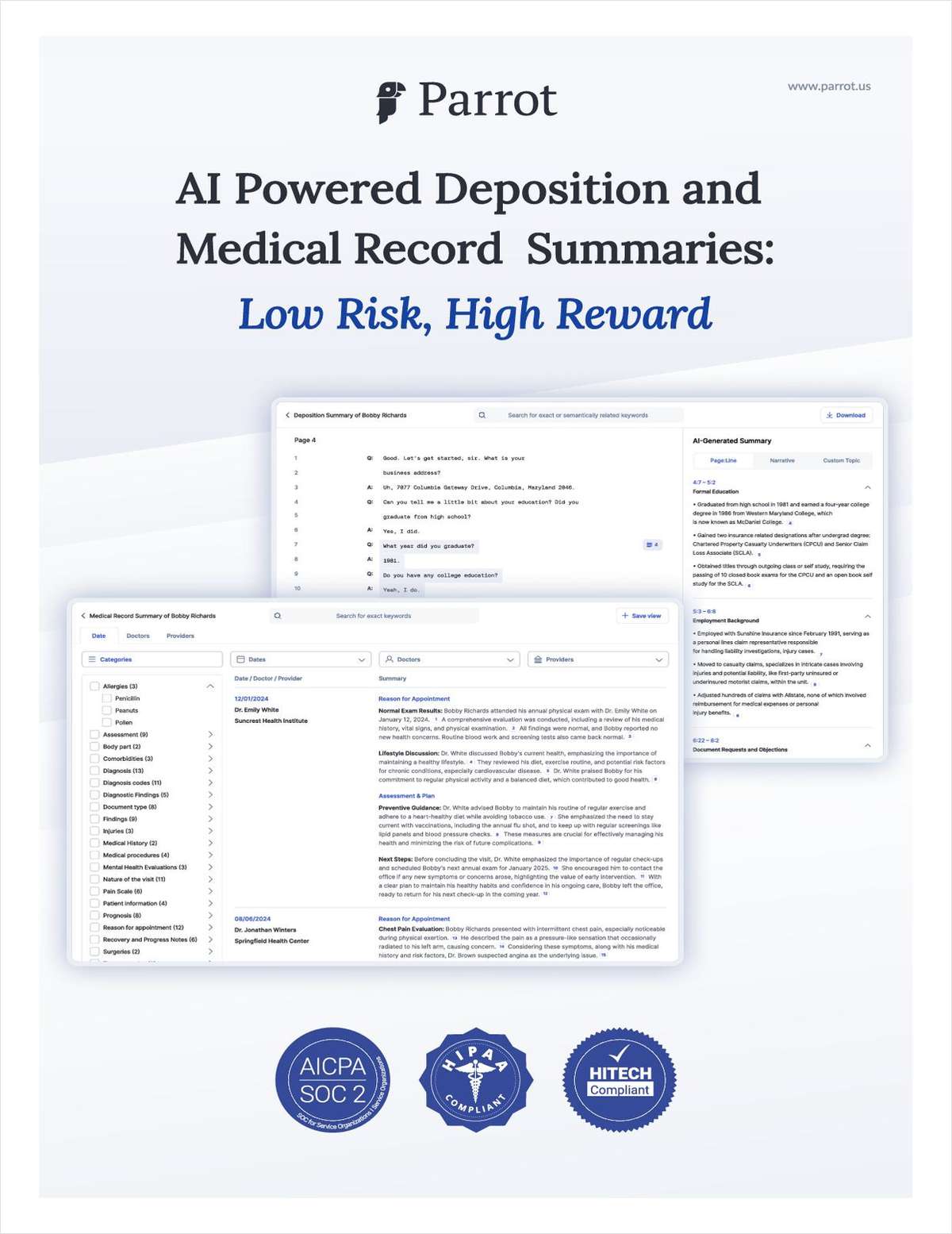

Clarity Through Citations and Confidence Scores: Ruli goes beyond simple AI outputs by offering citations and references alongside AI-generated results, ensuring users understand the origin of the information. In addition, Ruli provides proprietary confidence scores, helping users gauge how reliable a particular AI response is. These features bring transparency and clarity to AI, something that many other platforms lack.

Reinforcement Learning from Human Feedback (RLHF): Ruli is building RLHF capabilities, still in development, that will allow the model to improve over time based on human feedback. Once fully integrated, users will be able to provide real-time corrections and insights, ensuring the model continues to evolve and refine its accuracy. "This scalable training pipeline is crucial to ensuring the AI's long-term growth and adaptability," adds Xi Sun, CTO of Ruli.

Going Beyond the Chatbot: The Power of Merging AI with Good Old Software

The future of AI lies not in relying solely on probabilistic models, but in blending them with the reliable precision of good old deterministic software to provide accurate and meaningful insights. At Ruli, we focus on this hybrid approach, going beyond basic chatbot functions and integrating AI with practical, real-world applications.

For example, Ruli offers advanced features like Compare Redline, which provides executive summaries of document changes, and Multi-Docs Data Table Extraction, which lets users extract structured data across multiple documents simply by asking questions about what they want to know. By incorporating tools that offer clarity and context directly into AI analysis, we dramatically reduce the potential for hallucinations and provide more reliable outputs.
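One way to picture the hybrid approach: deterministic code finds exactly what changed between two document versions, and the model is asked only to summarize those changes. The sketch below uses Python's standard difflib module. It is an assumption about how such a pipeline could look, not a description of how Compare Redline works, and the contract text and function names are invented.

```python
import difflib

def changed_lines(old_text, new_text):
    """Deterministic step: return (removed, added) lines from a line-level diff."""
    diff = difflib.ndiff(old_text.splitlines(), new_text.splitlines())
    removed, added = [], []
    for line in diff:
        if line.startswith("- "):
            removed.append(line[2:])
        elif line.startswith("+ "):
            added.append(line[2:])
    return removed, added

def build_summary_prompt(removed, added):
    """Probabilistic step input: the model only sees changes the diff confirmed."""
    parts = ["Summarize these contract changes for an executive."]
    parts += [f"REMOVED: {line}" for line in removed]
    parts += [f"ADDED: {line}" for line in added]
    return "\n".join(parts)

# Invented example versions of a two-line contract.
old_version = "Payment is due within 30 days.\nThe term is two years."
new_version = "Payment is due within 45 days.\nThe term is two years."

removed, added = changed_lines(old_version, new_version)
summary_prompt = build_summary_prompt(removed, added)
print(summary_prompt)
```

The unchanged clause never reaches the model, so the summary cannot invent an edit to it. The diff decides what changed, and the model only decides how to describe it.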

In essence, Ruli differentiates itself by not just applying AI for the sake of automation, but by ensuring that AI's insights are clear, accurate, and actionable. "We've learned that AI works best when it's got a human touch. That's why Ruli combines intelligent systems and real-time feedback for results you can trust," concludes Bryan Lee. "At Ruli, we've created a solution that delivers more than just chat predictions. It provides confidence and context, coupled with automated experiences that enable users to tap into the full potential of their knowledge base for tailored, more reliable results."

By marrying probabilistic AI with deterministic, user-driven experiences, Ruli brings the future of AI-powered insights into industries that require precision and trust.

Ready to experience the future of AI-powered legal solutions?

Visit Ruli.ai to learn how we can help you leverage AI with clarity, confidence, and privacy in every decision.