Controversy Continues Over Planned Use of Facial Recognition Tech in NY Public Schools

The New York Civil Liberties Union challenges the planned use in Lockport, New York, while school officials say they recognize the importance of safeguarding privacy.

January 08, 2019 at 09:30 AM

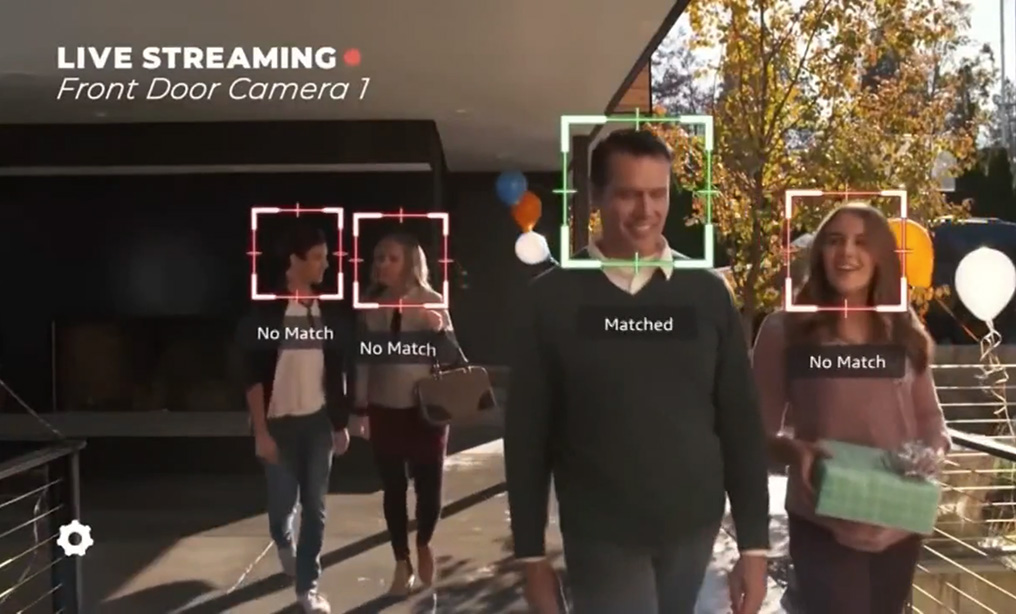

The AEGIS facial matching system was designed specifically for schools by the Canadian company SN Technologies. (YouTube)

Lockport, New York's planned use of facial recognition technology in its public schools continues to generate controversy.

The district acquired the technology, including the AEGIS facial matching system, last year, according to the New York Civil Liberties Union.

Last week, a NYCLU representative told Legaltech News that after the group objected “to deploying this surveillance technology without any privacy policy in place, the Lockport School District drafted a privacy policy, and the state education department has called for a privacy assessment.”

School officials could not be reached for comment by Legaltech News about their plans for the technology. School Superintendent Michelle Bradley told The Lockport Union-Sun & Journal in late December that the district had not decided when to implement its facial recognition software.

But the NYCLU appears to be keeping the board's plan for the technology under close scrutiny.

“We are exploring legislative options,” the NYCLU representative said when asked about the group's next move. “New York state currently has no regulations to govern the use of this technology. The New York State Education Department and the state Legislature should prohibit its use in public schools so that students are not subjected to this invasive surveillance technology.”

Beyond the price tag, the NYCLU was concerned about such issues as:

- Once an image is captured and uploaded, the system can track the person's movements around the school over the previous 60 days;

- Students meeting with a counselor or school clinic staff will be put into the system;

- The technology is allegedly “notoriously inaccurate, especially when it comes to identifying women, young people and people of color,” the NYCLU has said; and

- Who will get access to the database.

“The Family Educational Rights and Privacy Act obligates schools to protect student educational records, and the use of this surveillance technology in schools raises serious questions about the readiness of schools to protect sensitive biometric information,” the NYCLU representative said.

The school board's draft policy explains that Lockport is “responsible for protecting the overall safety and welfare of the district's students, staff, properties, and visitors as well as deter theft, violence and other criminal activity on District property.” The policy says it establishes “parameters for the operation of security systems and protection of privacy.”

The “input and maintenance of personally identifiable information in the district's security systems will be limited to individuals who present an immediate or potential threat to the safety of the school community,” the policy further explains. These may include students who have been suspended from school, staff who have been suspended or are on administrative leave, Level 2 or 3 sex offenders, and others.

Moreover, information from security systems “may be shared with law enforcement or other governmental authorities in response to an immediate threat or as required by law,” the policy adds.

The NYCLU is concerned that other school districts could also install such technology. One law professor, Andrew Guthrie Ferguson, who teaches at the University of the District of Columbia, is raising similar concerns.

“Facial recognition technology in schools is an example of 'security theater' gone wrong,” Ferguson told Legaltech News. “Not only will the technology be largely ineffective, but the … cost of spending that money on cameras and software rather than teachers and students borders on unconscionable. We all want schools to be safe, but to take advantage of the fear from recent school shootings and other tragedies to sell surveillance against students is not in anyone's interest.”

Such technology “is not ready for prime time,” Ferguson added. “The number of false matches, incomplete identifications, and other problems is large enough that school districts should demand large-scale testing before any purchase. And, worse, the companies know about the limitations but are using students as their training data to improve the computer models.”

In addition, because the system is aimed mostly at students, “I would be worried about how the images will be used in addition to school safety,” Ferguson added. “Who owns the data? What can be done with the student images? Companies know that facial recognition matches are the identifications of the future. Once you have an image and a name, you can track people in many more places. … So, I would be very cautious of having young people, many not legally able to consent, give up their images to companies and schools to monetize their data.”

NYCLU education counsel Stefanie Coyle said in a statement to Legaltech News, “The Lockport City School District should not have spent millions of dollars on invasive technology that will not make schools safer.”

“The draft policy—which should have been created with public input before the technology was installed—has no meaningful limits on sharing facial recognition information with law enforcement or 'governmental authorities,' which may cause some parents to be fearful about sending their children to school,” she said. “It establishes no protocols to guard against harm if the system produces a false match, and in too many situations it would allow the data to be stored longer than the proposed 60 days. The Lockport School District should allow for public comment on this policy, and take seriously the concerns of parents, students and community members before this kind of notoriously inaccurate and biased surveillance technology is let loose on children.”
