Law Librarians Push for Analytics Tools Improvement After Comparative Study

Four law firm library directors walked through the results of a comparative study of analytics platforms, which found no winner but identified a number of issues and potential improvements.

July 15, 2019 at 12:52 PM


Within the past few years alone, data analytics capabilities for law have exploded, and so has the number of software offerings providing those analytics. So how does a law firm or academic law library choose among platforms?

A group of law librarians set out to answer that question with a study of their own. At the Monday “The Federal and State Court Analytics Market—Should the Buyer Beware? What's on the Horizon?” session of the American Association of Law Libraries (AALL) annual meeting, four law firm library directors walked a packed room through the results. The study found no winner, but it identified a number of issues and potential improvements for current analytics platforms.

Diana Koppang, director of research and competitive intelligence at Neal, Gerber & Eisenberg, explained that the study analyzed seven platforms focused on federal courts: Bloomberg Law, Docket Alarm Analytics Workbench, Docket Navigator, Lex Machina, Lexis Context, Monitor Suite, and Westlaw Edge. The study included 27 librarians from law firms and academic law libraries with each tester analyzing two platforms over the course of one month.

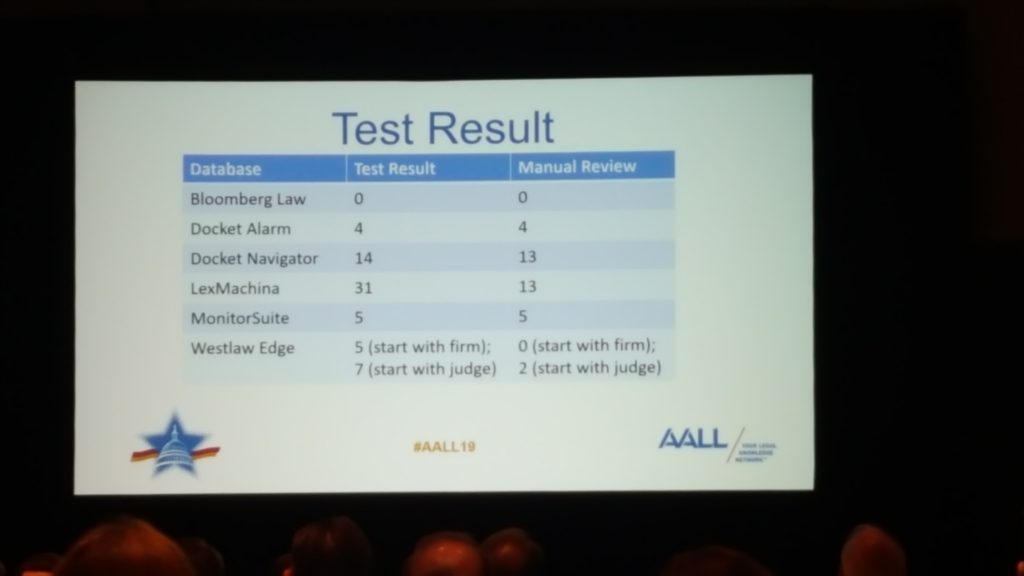

Each tester worked through a common set of 16 “real world” questions, developed by a panel of four librarians in conjunction with attorneys. The first question, for example, asked, “In how many cases has Irell & Manella appeared in front of Judge Richard Andrews in the District of Delaware?” with further constraints covering intellectual property and dockets. The full set of questions is available on the AALL website.

That first question revealed a key problem with comparing the tools: there was little consistency between the platforms. The correct answer was 13 cases, explained Tanya Livshits, director of research services at Irell & Manella. Not a single platform returned 13.

Results courtesy of AALL. Photo by Zach Warren/ALM.

The problems differed from platform to platform: Bloomberg Law had trouble with the IP aspects of the search, Docket Navigator and Lex Machina returned false hits for attorneys who had left the firm, Monitor Suite focused on opinions rather than dockets, and Westlaw Edge automatically filtered for the top 100 results, of which Irell & Manella was not one.

This is not to say that analytics programs aren't helpful. In fact, identifying some cases up front can be a good way to kick-start the search. It's just that these platforms shouldn't be the final word on research, Livshits said.

“This just proves that while analytics are a great place to start, you need to dive deeper if you want to do something exact,” she explained. “A manual review is still so necessary.”

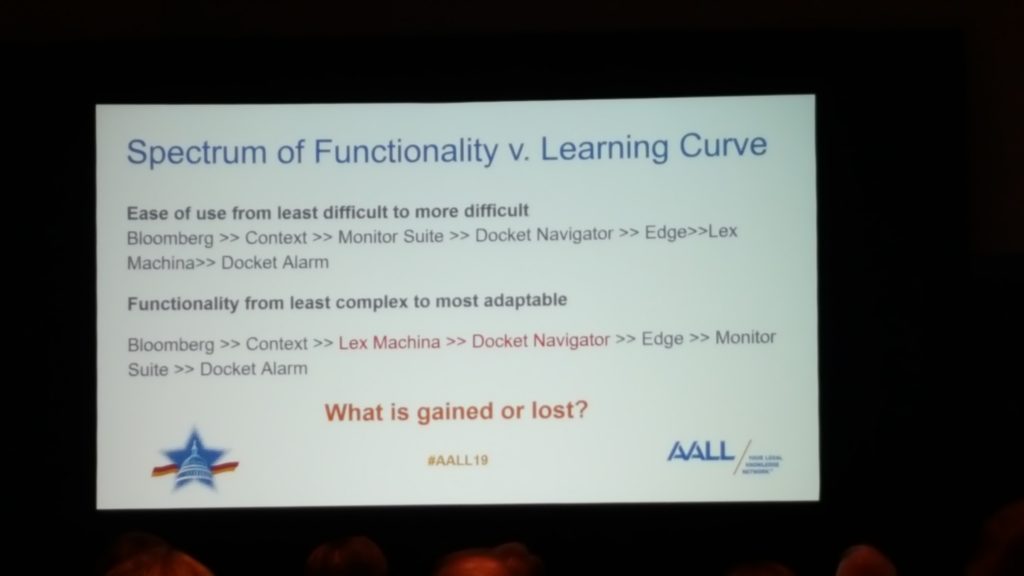

The study also sorted the companies by both functionality and complexity.

Results courtesy of AALL. Photo by Zach Warren/ALM.

And herein lies the second issue: in many cases, the librarians felt they weren't comparing apples to apples. Jeremy Sullivan, manager of competitive intelligence and analytics at DLA Piper, noted that Lexis Context is his go-to for expert witnesses, while Monitor Suite has focused more on competitive intelligence, with granular tagging and exhaustive filters and lists.

These differences are why the testers would not, and could not, declare that one platform is better than another, Koppang said. “So much depends on your use case; so much depends on your organization size. … There's so many factors that nobody can declare a winner,” she explained.

Kevin Miles, manager of library services at Norton Rose Fulbright, agreed, adding, “This is just our assumption, you may have a different idea. … What we're trying to do here is have all of the vendors have some improvement. We're not trying to burn anybody, we're trying to get people to improve.”

Ultimately, the panel said, the goal is for the assembled law librarians to conduct similar tests of their own. Koppang said that those conducting a test should:

- Think about your use case (practice area, key users) prior to deciding what and how to test;

- Record the date and time of each search, which is key for comparing results;

- Use real-world examples;

- Document the search strategy (date ranges, steps taken, outside resources); and

- Remember to capture images and export data.

Conducting these tests may not only provide key insights into selecting a platform, but also serve another purpose: helping the law librarians understand the full capabilities of the platforms. “A lot of the testers emailed me and said, 'This is a really great training exercise,' which we didn't really think of it like that,” Koppang added.

The testers also offered suggestions for the vendors whose platforms they tested, among them greater flexibility and better training for those who train others. Miles offered a few proposals to improve learning, including additional short videos on Vimeo or YouTube, additional short PDF training documents, pre-set searches with buttons or check boxes to combine features, and the ability to mouse over specific words to reveal search strategy reminders.

Koppang added, “They're trying to explain every single piece and module they have, and they're overwhelmed.”

The panel also hammered home one point throughout the presentation: the need for transparency. Given problems like those uncovered by Question 1, the panelists (and audience members asking questions) stressed that they need to know each platform's limitations in order to properly inform attorneys.

If something goes wrong without that information, Livshits said, “You're going to lose the trust of both the librarian and the attorney, and it's tough to get the trust of the attorney back.”

Finally, Sullivan suggested that, for the benefit of smaller firms, analytics platforms could do a better job of combining and offering features. In particular, he pointed to companies that said they don't offer a feature because it's in a “sister platform.”

“Many of you are content to say you can't be all things to all people,” Sullivan said. “Well, I would say you're not trying.”


© 2025 ALM Global, LLC, All Rights Reserved. Request academic re-use from www.copyright.com. All other uses, submit a request to [email protected]. For more information visit Asset & Logo Licensing.
